At first glance, a Content Security Policy (CSP) set to self looks like it should defeat XSS. Depending on how well the web application is written, though, there might still be ways to get around it.

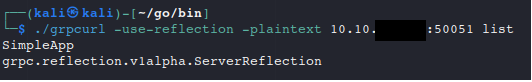

While playing around on HTB, I came across a machine where the initial foothold was designed to be gained via cross-site scripting (XSS) against a “bot user” on a website. When editing a field in an order form on my own account, I could successfully enter an XSS payload, yet it got blocked by the CSP: because the policy was set to self, the browser rejected inline JavaScript. After looking around the site and exploring its features, I noticed that I could upload a profile picture, so I decided to see whether there were any restrictions on the files that could be uploaded as my avatar. Lucky for me, there weren’t any, and I successfully uploaded a JavaScript file as my profile image.

With the malicious JavaScript payload uploaded as my profile image, I could get XSS to trigger on my own order forms. Instead of injecting inline JavaScript, I injected a script tag whose source pointed at my profile image. The CSP allowed it because the file was served from the site's own origin.

`<script src="http://ctftarget.htb/static/profile/1"></script>`

After that, it was just a matter of finding out how to feed that XSS to the bot user, which turned out to be easy: because permissions were broken, I could simply update the bot's order form.
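To make it clearer why the script tag above gets through while an inline payload does not, here is a rough Python sketch of the decision a browser makes under a self-only script policy. It is a deliberately simplified model and not the full CSP matching algorithm (it ignores nonces, hashes, redirects and scheme upgrades). The function names and the `/orders/1` page path are made up for illustration; only the profile image URL comes from the machine.

```python
from urllib.parse import urljoin, urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url):
    """Return the (scheme, host, port) triple that identifies an origin."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return (parts.scheme, parts.hostname, port)


def allowed_by_self(page_url, script_src=None):
    """Simplified model of a script policy that only lists 'self'.

    script_src=None stands for an inline <script> block or event handler.
    """
    if script_src is None:
        # Inline code has no URL to match, so a self-only policy blocks it.
        return False
    # External scripts are allowed when they resolve to the page's own origin.
    return origin(urljoin(page_url, script_src)) == origin(page_url)


# Hypothetical page path, used only for this illustration.
page = "http://ctftarget.htb/orders/1"

print(allowed_by_self(page))                                          # inline payload
print(allowed_by_self(page, "http://ctftarget.htb/static/profile/1"))  # uploaded "avatar"
print(allowed_by_self(page, "http://attacker.example/payload.js"))     # off-site script
```

Only the second call returns `True`: the policy cares about where a script is loaded from, not about who put the file there, which is exactly what the unrestricted avatar upload takes advantage of.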

So when you come across a restrictive CSP, look for other functionality that might help you reach your goal. And, as always, use your skills wisely, legally, and responsibly.